Log files usually serve as a repository of historical events, and they are often handy when debugging complex problems. However, log files can also be used as a source of events to “replay” a past scenario. In particular, the log files from a web server contain enough information (time, URL, etc.) to reconstruct a series of requests over time. With RedLine13 “Log Replay” tests, you can use these log files to drive your load tests. In addition, you can run as many load generator instances as desired to scale your log replay test to nearly any level.

Getting Started

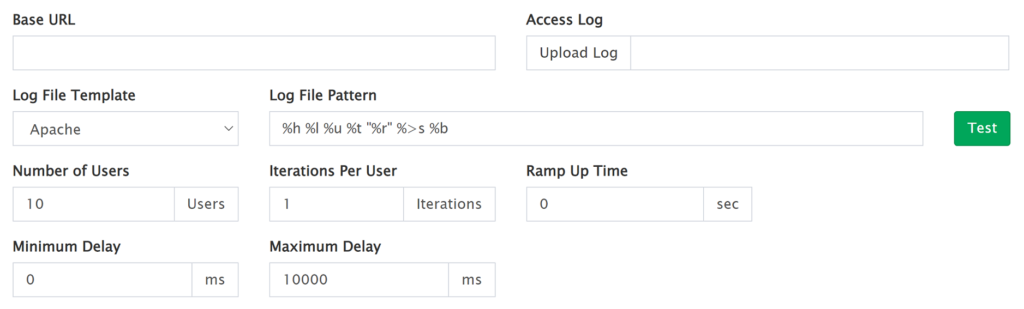

To run a Log Replay test on RedLine13, begin by logging into your account, clicking “Start Test“, and then navigating to the “Log Replay Test” tab:

Running Your First Log Replay Test

To successfully run a log replay load test, you will need at a minimum the following information:

- Base URL

  Apache and NGINX log files contain relative paths. You will need to specify a Base URL, as it is not contained within the useful portions of the log file.

- Log File

  You may upload your entire log file or, in a more likely scenario, a sample from your log file. There are upload limits for attaching extra files to your test (which are described on our pricing and features page). The log file is uploaded in the “Access Log” field when creating your load test.

- Log File Pattern

  It is crucial that the Log File Pattern matches the entries in your log file. When you select a test type, this field is prepopulated with a default pattern, but you must check it and, if necessary, modify it in accordance with the Log Format Descriptions listed below.

- Scaling Basics

  We provide default values for fields such as Number of Users and Iterations per User; however, you will likely want to configure these fields to adjust how much load your test generates.

- Advanced Cloud Options

  For larger tests and for finer control over resource allocation, you can expand the “Advanced Cloud Options” section, which allows you to specify the number of servers, instance size, and other cloud configuration options.
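To illustrate why the Base URL is required, the following minimal sketch shows how a relative path from a log entry could be combined with a Base URL to form the full request URL. The `build_url` helper, the `https://example.com` address, and the sample paths are hypothetical and chosen only for illustration; this is not RedLine13's actual implementation.

```python
# Hypothetical Base URL; substitute the site you intend to test.
BASE_URL = "https://example.com"

# Relative paths as they might appear in the request field of an access log
# (illustrative values only).
logged_paths = ["/favicon.ico", "/index.html", "/search?q=load+test"]


def build_url(base_url: str, path: str) -> str:
    """Join a Base URL and a relative path from a log entry."""
    # Normalize so that exactly one slash separates the base from the path.
    return base_url.rstrip("/") + "/" + path.lstrip("/")


replay_urls = [build_url(BASE_URL, path) for path in logged_paths]
for url in replay_urls:
    print(url)
```

Without a Base URL, the paths alone would not identify which site to send the requests to.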

You can initiate your test by going through the following steps:

- First make sure you have your AWS credentials configured properly. This is not an absolute prerequisite; however, it will allow you to configure load generator server sizes for your test.

- Specify a test configuration by filling out the required fields from the list above.

- Name your test.

- Click on “Start Test”.

Viewing Results for Your Test

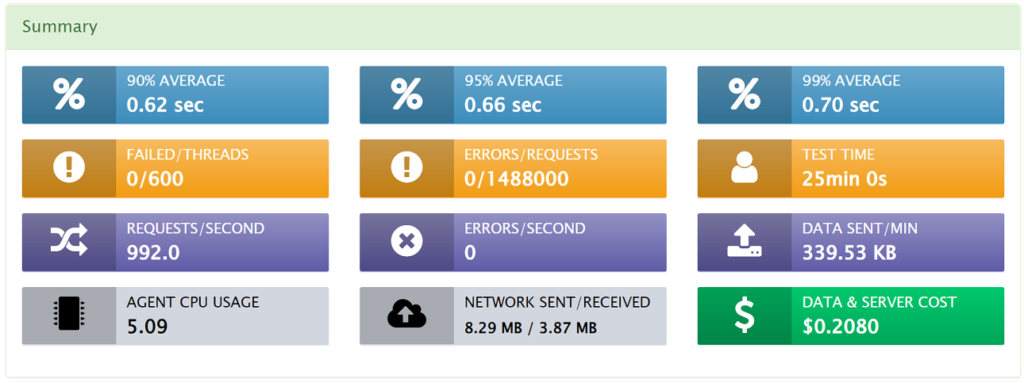

As your test begins, real time results will be displayed on the load test results page. These tables and graphs will update and finalize at the conclusion of your test. In addition to this, a test summary will be displayed after your test completes containing the most pertinent test information. Below is an example which is also covered in our documentation in more detail under “Test Summary“:

Field Descriptions

| Field | Description |
| --- | --- |
| Base URL | The base URL that is prepended to all requests found in the log file. (Log files do not tell us the site's URL.) |
| Access Log | The log file to parse, which can be in Apache, NGINX, or plain list format. RedLine13 currently uses all the GET requests to generate traffic. |
| Log File Template | This field is not required; however, selecting Apache or NGINX will populate the pattern with the default for that log type. |
| Log File Pattern | The pattern used to parse each request. The available patterns are defined below and in the open-source log file parser. |
| Number of Users | The total number of users to simulate across your cloud servers. Each user replays the entire log file at least once. You may also set iterations per user as described below. |
| Iterations Per User | The number of times each user replays the entire log file. |
| Ramp up Time | The time over which users are ramped up; if it is not specified, all users start at the same time. Users are ramped up evenly, per cloud server, over the time specified. |
| Minimum Delay | For each request in the log file, the minimum time to wait before making the HTTP or HTTPS request. |
| Maximum Delay | For each request in the log file, the maximum time to wait before making the HTTP or HTTPS request. |
| Save Response and Percentiles | This checkbox has two effects. The first is to save the response output, as we capture the content returned by each request. The second is to calculate performance percentiles after the test completes. |
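The scaling fields interact in a simple way, which the following rough sketch tries to make concrete. It assumes that each user issues every GET request in the log once per iteration, that ramp-up spreads user start times evenly across the window on a single server, and that the delay before each request is drawn uniformly between the minimum and maximum. The uniform distribution and all function names are assumptions made for illustration, not a description of RedLine13 internals.

```python
import random


def total_requests(users: int, iterations: int, get_requests_in_log: int) -> int:
    """Each user replays every GET request in the log once per iteration."""
    return users * iterations * get_requests_in_log


def ramp_up_offsets(users_on_server: int, ramp_up_seconds: float) -> list[float]:
    """Start offsets (in seconds) that spread users evenly over the ramp-up window."""
    if users_on_server <= 0:
        return []
    step = ramp_up_seconds / users_on_server
    return [i * step for i in range(users_on_server)]


def pick_delay(min_delay: float, max_delay: float, rng: random.Random) -> float:
    """Assumed behaviour: a uniformly random wait between the two bounds."""
    return rng.uniform(min_delay, max_delay)


print(total_requests(users=50, iterations=2, get_requests_in_log=1000))  # 100000
print(ramp_up_offsets(users_on_server=5, ramp_up_seconds=60))  # [0.0, 12.0, 24.0, 36.0, 48.0]
print(pick_delay(1.0, 3.0, random.Random(0)))  # some value between 1.0 and 3.0
```

An estimate like this can help when choosing the number of users and iterations before committing to a large test.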

Log Format Descriptions

The formatting conventions we use for parsing log files are based on the open-source Log File Parser project, which you can find on GitHub. That project is in turn based on a log parser written by Rafael Kassner, which is also available on GitHub. Default patterns for Apache and NGINX are as follows:

| Log type | Default pattern |
| --- | --- |
| Apache | %h %l %u %t "%r" %>s %b |
| NGINX | %h %l %u %t "%r" %>s %O "%{Referrer}i" "%{User-Agent}i" |

For Apache log files, the following formatting expressions apply:

| Expression | Description |
| --- | --- |
| %h | The remote host, that is, the client that made the request. |
| %l | Log name. |
| %u | The request user. |
| %t | The request time. |
| "%r" | The actual request, which is wrapped in quotes. |
| %>s | Status, but multiple statuses can be read at this point. |
| %b | Response bytes. |

For NGINX log files, the following formatting expressions apply:

| Expression | Description |
| --- | --- |
| %h | The remote host, that is, the client that made the request. |
| %l | Log name. |
| %u | The request user. |
| %t | The request time. |
| "%r" | The actual request, which is wrapped in quotes. |
| %>s | Status, but multiple statuses can be read at this point. |
| %O | Response bytes sent. |
| "%{Referrer}i" | An expression of the form %{…}i denotes a header that was written to the log file, in this case the referrer. |
| "%{User-Agent}i" | The user-agent header, which is expected in the log file. |
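To show how a pattern built from these expressions can drive parsing, here is a minimal sketch that translates a pattern into a regular expression with one named group per expression. The regex fragments, group names, and the `compile_pattern` helper are assumptions made for illustration; the open-source parser may work differently. The sketch also assumes the pattern uses straight double quotes, and the log line in the usage example is illustrative.

```python
import re

# Regex fragment for each expression. The fragments and group names are
# illustrative assumptions, not those used by the actual parser.
TOKEN_REGEX = {
    "%h": r"(?P<host>\S+)",
    "%l": r"(?P<logname>\S+)",
    "%u": r"(?P<user>\S+)",
    "%t": r"\[(?P<time>[^\]]+)\]",
    "%r": r'(?P<request>[^"]*)',
    "%>s": r"(?P<status>\d{3})",
    "%b": r"(?P<bytes>\d+|-)",
    "%O": r"(?P<bytes_sent>\d+)",
}

# Matches %{Header}i, %>s, or a single-letter expression such as %h.
TOKEN = re.compile(r"%\{(?P<header>[^}]+)\}i|%>s|%[a-zA-Z]")


def compile_pattern(pattern: str) -> re.Pattern:
    """Translate a log pattern into a compiled regex with named groups."""
    parts, pos = [], 0
    for match in TOKEN.finditer(pattern):
        # Literal text between expressions (spaces, quotes) must match exactly.
        parts.append(re.escape(pattern[pos:match.start()]))
        header = match.group("header")
        if header is not None:
            name = "header_" + re.sub(r"\W", "_", header).lower()
            parts.append(rf'(?P<{name}>[^"]*)')
        elif match.group(0) in TOKEN_REGEX:
            parts.append(TOKEN_REGEX[match.group(0)])
        else:
            raise ValueError(f"unsupported expression: {match.group(0)}")
        pos = match.end()
    parts.append(re.escape(pattern[pos:]))
    return re.compile("^" + "".join(parts) + "$")


apache = compile_pattern('%h %l %u %t "%r" %>s %b')
line = '192.168.99.1 - - [02/Apr/2016:23:38:56 +0000] "GET /favicon.ico HTTP/1.1" 404 209'
fields = apache.match(line).groupdict()
print(fields["host"], fields["request"], fields["status"])
```

If the pattern does not match the layout of the log entries, `match` returns `None`, which is why the Log File Pattern must be checked against the actual log file.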

Log File Sample

Using the above log format descriptions, we can consider the following log file sample. In this case the log excerpt has come from an NGINX log:

192.168.99.1 - - [02/Apr/2016:23:38:56 +0000] "GET /favicon.ico HTTP/1.1" 404 209 "https://192.168.99.101/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_0) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2695.1 Safari/537.36"

This sample makes it easier to visualize where each of the above expressions extracts its data from a line in the log file. For instance, “%h” for the host corresponds to “192.168.99.1”, “%t” for the request time corresponds to “[02/Apr/2016:23:38:56 +0000]”, and “%{Referrer}i” corresponds to “https://192.168.99.101/”. The parser attempts to parse each line of the log file as a single request.
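As a worked example, the sketch below parses the sample line with a hand-written regular expression for the default NGINX pattern, then splits the request into method, path, and protocol and converts the timestamp into a `datetime`. The regex, the `parse_line` helper, and the field names are assumptions for illustration and do not reflect how RedLine13 parses logs internally.

```python
import re
from datetime import datetime

SAMPLE = (
    '192.168.99.1 - - [02/Apr/2016:23:38:56 +0000] '
    '"GET /favicon.ico HTTP/1.1" 404 209 "https://192.168.99.101/" '
    '"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_0) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/51.0.2695.1 Safari/537.36"'
)

# One named group per expression in: %h %l %u %t "%r" %>s %O "%{Referrer}i" "%{User-Agent}i"
NGINX_LINE = re.compile(
    r'^(?P<h>\S+) (?P<l>\S+) (?P<u>\S+) \[(?P<t>[^\]]+)\] '
    r'"(?P<r>[^"]*)" (?P<s>\d{3}) (?P<O>\d+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"$'
)


def parse_line(line: str):
    """Return a dict of fields for one log line, or None if it does not parse."""
    match = NGINX_LINE.match(line.strip())
    if match is None:
        return None
    fields = match.groupdict()
    request_parts = fields["r"].split(" ")
    if len(request_parts) != 3:
        return None  # malformed request, e.g. "-"
    fields["method"], fields["path"], fields["protocol"] = request_parts
    # %b (month abbreviation) assumes an English/C locale.
    fields["when"] = datetime.strptime(fields["t"], "%d/%b/%Y:%H:%M:%S %z")
    return fields


entry = parse_line(SAMPLE)
print(entry["h"], entry["method"], entry["path"], entry["when"].isoformat())
# Only GET requests are replayed, so other methods would be skipped.
print("replay candidate:", entry["method"] == "GET")
```

The parsed path is what would be appended to the Base URL, and the timestamps give the original ordering of requests.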