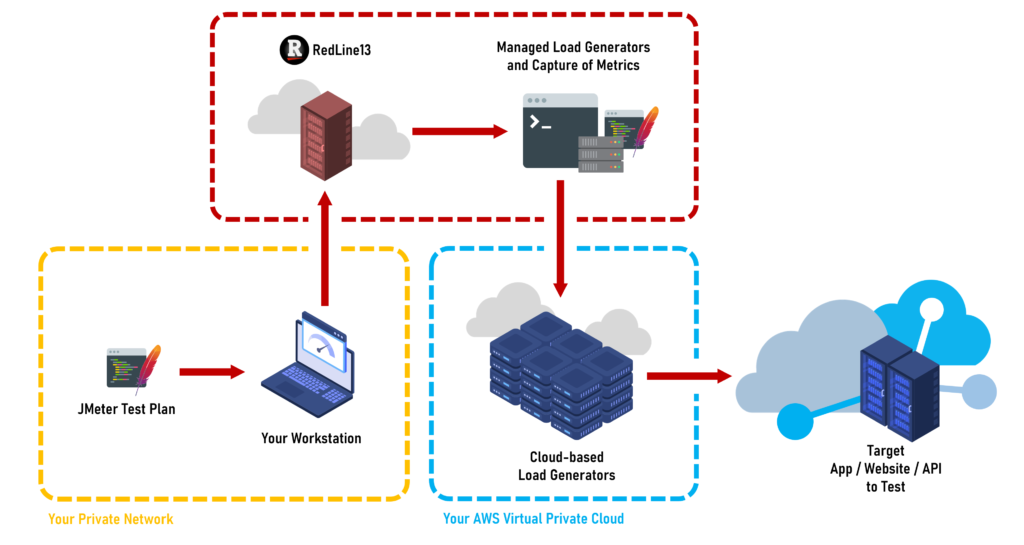

When you use RedLine13, there is a RedLine13 subscription cost and an AWS cost. RedLine13 generates the load on your AWS servers, as shown in the diagram above. AWS costs are already ridiculously low. One advantage of this setup is that you have complete control: if you’re running a small number of tests, your AWS costs will be correspondingly low. Another advantage of running the load on your own servers is that it is billed directly to you, so you get whatever AWS discounts you already have and no one marks up the cost.

The bottom line is that using RedLine13 is the least expensive way to load test. RedLine13 subscription costs are far lower than those of other load testing tools. Just as important, RedLine13 has significantly higher limits on the number of tests, the size and length of tests, and more. Read more about RedLine13 pricing and limitations.

But what will your AWS costs be if you use RedLine13? This post explains how to estimate them.

Data & Server Costs

One way to estimate the AWS cost of a new test is to run a small test to get actual costs, then extrapolate to the size and length of the test you plan to run. In the RedLine13 load test summary metrics, you can find the green “Data & Server Cost” box, which approximates the projected AWS spend for your test.

Cost estimation formula

If you’d like to understand how we come up with the cost, read here. We have created a formula “template” to easily estimate the approximate AWS spend for any test run on RedLine13, using the following information:

| Server Price/Hr | Number of Servers | Data Sent (GB) |
|---|---|---|
| $0.113 | 3 | 0.0022 |

To aid in illustrating, we have provided some sample values. With these numbers in hand, the estimated cost of a test lasting up to one hour can be calculated as follows:

(Server Price/Hr) x (Number of Servers) + (Data Sent in GB) x $0.09

Plugging in our sample values, we arrive at:

($0.113 x 3) + (0.0022 x $0.09) = $0.339 + $0.0002 ≈ $0.339

The total cost estimate works out to about 34 cents and includes both the total EC2 hourly cost and the total data upload cost for the entire test. In many cases, including this example, data upload is a negligible contributor to total cost. Furthermore, this figure represents the maximum that you can expect AWS to charge. Your actual billed charges will almost certainly be lower due to a number of factors, including free tier usage, intra-AWS data transfer discounts and waivers, etc.
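The template formula translates directly into a small helper. This is a minimal sketch, not RedLine13’s actual implementation: `estimate_test_cost` is a hypothetical name, and it assumes a test lasting up to one hour and the flat $0.09/GB upload rate used in the formula.

```python
# Assumption: every uploaded GB is billed at the highest outbound tier, $0.09/GB.
DATA_RATE_PER_GB = 0.09


def estimate_test_cost(server_price_per_hr: float, num_servers: int, data_sent_gb: float) -> float:
    """Hypothetical helper: estimated AWS spend (USD) for a test of up to one hour."""
    server_cost = server_price_per_hr * num_servers  # one billed hour per server
    data_cost = data_sent_gb * DATA_RATE_PER_GB      # outbound data only
    return server_cost + data_cost


# Sample values from the table: $0.113/hr, 3 servers, 0.0022 GB uploaded.
cost = estimate_test_cost(0.113, 3, 0.0022)
print(f"${cost:.4f}")
```

Keeping the data rate as a named constant makes it easy to swap in a different tier if your traffic pattern qualifies for one.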

Estimating the AWS Cost for a New Test

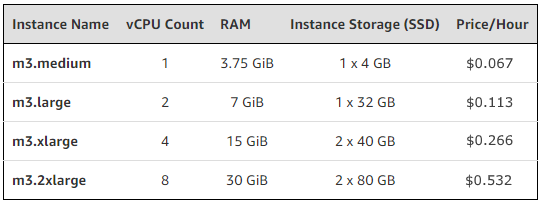

If you want to estimate the cost of a new test before running it, you can perform a hypothetical cost analysis. This involves the relatively well-known cost of EC2 load agent instances, plus a rough estimate of data transfer costs. Tackling the former first, we can reference an excerpt from the published AWS EC2 pricing tables for some quick calculations.

Let us select a server size of m3.medium. In this scenario, we know that our test requires three of these instances, and will be run for a total duration of one hour. (For more insight on how to select instance sizes and number of instances, please refer to this post on vCPU determination, as well as this post on EC2 type selection.) We can roughly estimate the anticipated AWS bill for the load using the information known thus far:

$0.067 x 3 x 1(hr) = $0.201

Therefore, we would anticipate the EC2 portion of our costs to be about 20 cents using on-demand m3.medium instances. If we were willing to use spot instances as described in this post, we could potentially save more with their lower rates.

The more difficult initial estimation is perhaps data costs due to the fact that these are often less intuitive to calculate prior to experimentation with running the test plan. Only the upload portion of data transfer (i.e., outgoing from AWS) is billable. There are many ways to calculate a data upload estimate, but one way is to approximate the per-request data upload and multiply it by the number of anticipated requests. For our example, let us use 700 bytes (small upload numbers are typical for an HTTP GET request). If our test is designed to run approximately 200,000 requests per load agent over the course of one hour, then we can anticipate about 140kB per load agent over the same time. Since we have three load agents, we can anticipate approximately 420kB of data upload per hour for our test. The calculation for this is as follows:

700B x 200,000(req.) x 3 = 420kB

|

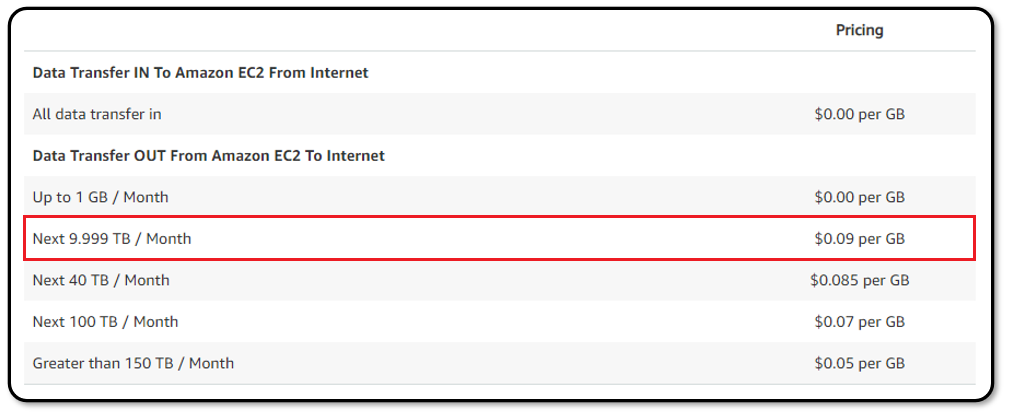

Our cost estimation algorithm is based on current published data transfer rates. We do however make assumptions that will report close to the maximum AWS charge you can expect (as described above). To better understand this, let us examine the actual AWS-published pricing tables for the US-East (Ohio) and US-East (N. Virginia) regions:

Assuming outbound traffic to a non-AWS target, a large percentage of AWS customers will fall into the highlighted row. The rate for this type of data transfer is 9 cents per GB. Since we intend to upload far less than 1GB, our data cost will be negligible. We can still calculate it according to the highest pricing tier as follows:

0.00042GB x $0.09 = $0.0000378

|

The data cost in this scenario can be ignored when adding these two figures together, we arrive at:

$0.201 + $0.0000378 ≈ $0.201

|

Therefore we can expect our test to cost approximately 20 cents or less.
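Since the data estimate hinges on getting the units right, the upload arithmetic can be sketched in a few lines. This assumes decimal units (1 GB = 10^9 bytes) and the flat $0.09/GB rate; `upload_gb` and `upload_cost` are hypothetical helper names, not part of RedLine13 or AWS.

```python
BYTES_PER_GB = 10**9      # assumption: decimal gigabytes
DATA_RATE_PER_GB = 0.09   # highest-tier outbound rate


def upload_gb(bytes_per_request: int, requests_per_agent: int, num_agents: int) -> float:
    """Total outbound data, in GB, across all load agents."""
    total_bytes = bytes_per_request * requests_per_agent * num_agents
    return total_bytes / BYTES_PER_GB


def upload_cost(gb: float) -> float:
    """Data transfer cost in USD at the flat highest-tier rate."""
    return gb * DATA_RATE_PER_GB


# Example inputs: 700 B per request, 200,000 requests per agent, 3 agents.
gb = upload_gb(700, 200_000, 3)
print(f"{gb:.2f} GB uploaded, ${upload_cost(gb):.4f} data cost")
```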

Estimating an actual test

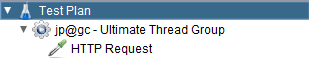

As above, let us walk through an actual test case. Since JMeter is by far one of the most popular frameworks used on RedLine13, we will start with a simple JMeter test plan. We’ll keep the architecture very basic and place a single HTTP Request Sampler inside a thread group:

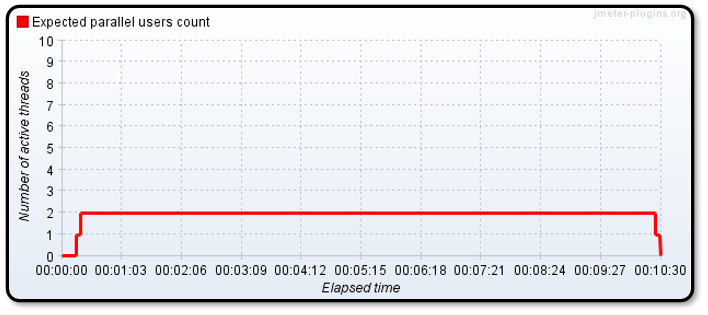

To generate some significant load, we have opted to use the Ultimate Thread Group plugin for JMeter. This allows us to set a constant thread count that will continuously bombard our single endpoint with requests:

The Ultimate Thread Group plugin allows us to precisely design a load profile vs. time.

Back on RedLine13, we will run this test using two m3.medium load agents. The hourly rate is the same as in the example above:

$0.067 x 2 x 1(hr) = $0.134

We anticipate our load agents will generate approximately 5,000 combined requests per hour. Each request is expected to send 2kB and receive 20kB. Since only outgoing data is billable, our test should incur data charges on only about 10MB (5,000 x 2kB). At $0.09 per GB that comes to well under a tenth of a cent, so to be conservative we’ll round the data charge up to 1 cent. Adding our estimated figures together, we expect our total cost to be the following:

$0.134 + $0.01 = $0.144
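One way to express this estimate, including the conservative 1-cent data allowance, is to round the data charge up to the next whole cent. This is a sketch under stated assumptions: 2kB is taken as 2,000 bytes, the flat $0.09/GB rate applies, and `conservative_data_cost` is a hypothetical helper.

```python
import math

DATA_RATE_PER_GB = 0.09   # highest-tier outbound rate
BYTES_PER_GB = 10**9      # assumption: decimal gigabytes


def conservative_data_cost(total_requests: int, upload_bytes_per_request: int) -> float:
    """Data cost in USD, rounded UP to the next whole cent."""
    gb = total_requests * upload_bytes_per_request / BYTES_PER_GB
    raw_cost = gb * DATA_RATE_PER_GB
    # round() first so float noise (e.g. 5.000000000000001) doesn't add an extra cent
    return math.ceil(round(raw_cost * 100, 9)) / 100


server_cost = 0.067 * 2 * 1                      # two m3.medium agents for one hour
data_cost = conservative_data_cost(5_000, 2_000)  # only the 2kB upload is billed
print(f"${server_cost + data_cost:.3f}")
```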

Running an actual test

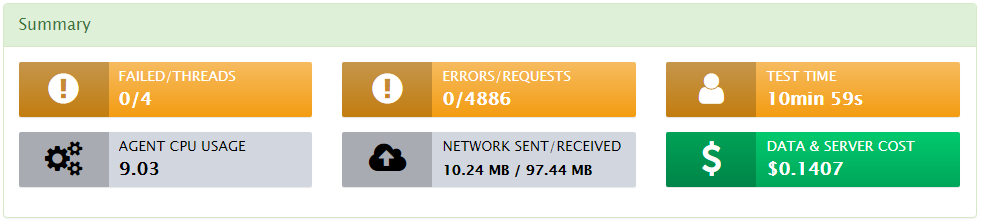

Now let’s run our test and see how close we have come to estimating our true AWS costs! Starting a new test in the US-East (N. Virginia) region using two m3.medium load agents and our JMeter test, here are the results:

Our predicted spend was $0.144, and the Data & Server Cost reported for the actual test was approximately $0.1407. With a difference of only about one third of a cent, that is very close to our prediction.

Some notes on AWS cost estimations

Amazon publishes multiple tools for calculating its charges, including the AWS Cost Calculator and the AWS Simple Monthly Calculator, along with other publications describing special pricing rules. Cost estimation for load agent instances is fairly straightforward, since it depends primarily on instance size and type, test duration, and data transmitted. If you know all of these variables ahead of time, you can probably make near-exact predictions of how much your load test will cost.

Cost estimation breakdown

Server costs are incurred from running load agents (EC2 instances) and reflect the rate AWS charges for each load agent for the duration of the test. The other component of cost is data transfer charges. Even though pricing tables list hourly rates, AWS prorates EC2 charges on a fractional-hour basis (either per-minute or per-second). Our estimate very conservatively uses the per-hour basis, rounded up to the next hour. Data cost estimation follows a similarly conservative philosophy. While it would theoretically be possible to trace all traffic sent and received by each individual load agent, we make some simple assumptions and treat all data as if it were billed at the highest cost tier.
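To see how much rounding up to the next full hour can overstate server cost, compare it with fractional-hour proration. This sketch uses a hypothetical 70-minute test on two m3.medium agents, ignores any minimum billing increment AWS may apply, and both function names are illustrative.

```python
import math


def rounded_up_server_cost(price_per_hr: float, num_servers: int, duration_s: int) -> float:
    """Conservative estimate: every started hour is billed in full."""
    hours = math.ceil(duration_s / 3600)
    return price_per_hr * num_servers * hours


def prorated_server_cost(price_per_hr: float, num_servers: int, duration_s: int) -> float:
    """Fractional-hour proration at per-second granularity."""
    return price_per_hr * num_servers * duration_s / 3600


duration = 70 * 60  # hypothetical 70-minute test, in seconds
rounded = rounded_up_server_cost(0.067, 2, duration)
prorated = prorated_server_cost(0.067, 2, duration)
print(f"rounded up: ${rounded:.4f}")
print(f"prorated:   ${prorated:.4f}")
```

The gap between the two figures is the safety margin the conservative estimate builds in; it shrinks as test durations approach whole hours.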

What will you save?

You’ll have to try it for yourself, but here’s a post about a company that saved 90% compared to their costs when using BlazeMeter. We can’t guarantee that you’ll save that much, so we encourage you to run your own comparison.

Try it out!

Sign up for a free trial account and start exploring RedLine13 today!

RedLine13 has a unique pricing model that saves companies, big and small, significant money compared to any other load testing platform. Here’s how it works.